In the previous post, I presented Onfido as a Digital Identity Document and Biometrics solution. We explored the happy path with my document and motion video. Both the document and motion reports passed as expected.

In another post, I explained how a light fake can be generated.

And in yet another post, I explained how to hijack a video that is sent to a biometric tool.

With the rapid evolution of AI, deepfakes have become a real threat. We will try to generate some and see whether Onfido can catch them.

What do I need to have? Two prerequisites:

- Photos of a real document

- Base video for light fake generation

My friend Krzysztof agreed to share photos of his identity card for this blog post. Let’s assume that Krzysztof lost his identity card, or he uploaded photos of it to, for example, his bank, and there was a data leak. Now, photos of his ID card are available on the Darknet. Of course, I blurred Krzysztof’s PII.

From the previous post, we have the base video hijacked from Onfido’s wizard:

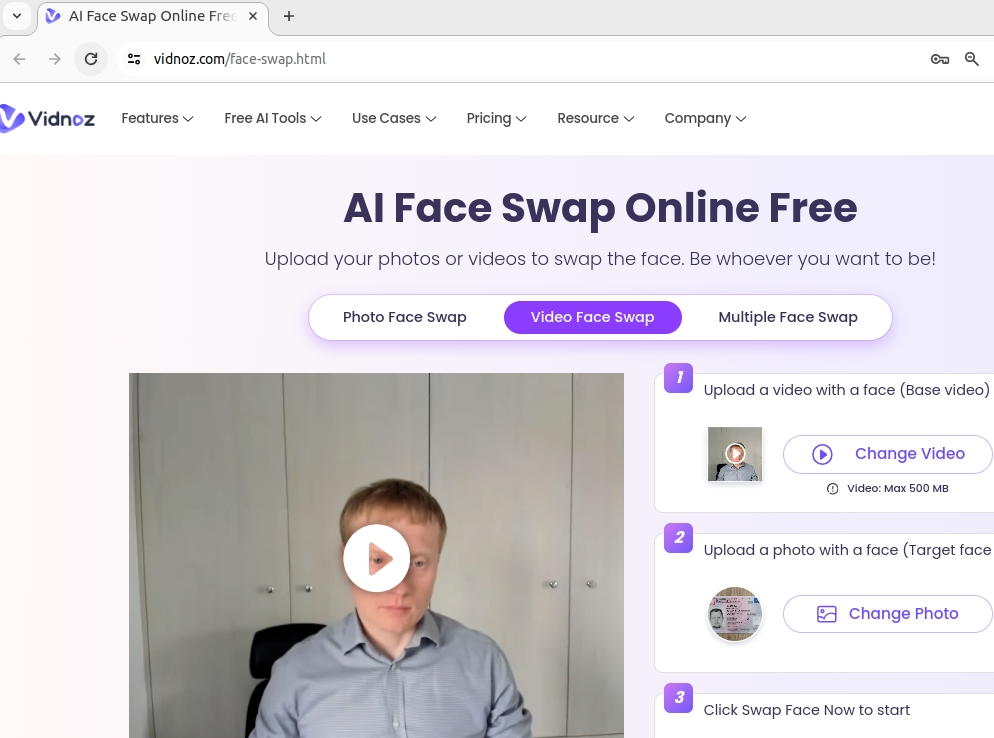

So, let’s now upload the base video and the front side of the identity card with the picture to Vidnoz.

After a while, we have a light fake generated.

I think the human eye can easily recognize that this video was generated and is a light fake.

Now we have the light fake prepared, but we also need to somehow add the front and back of the document to this video.

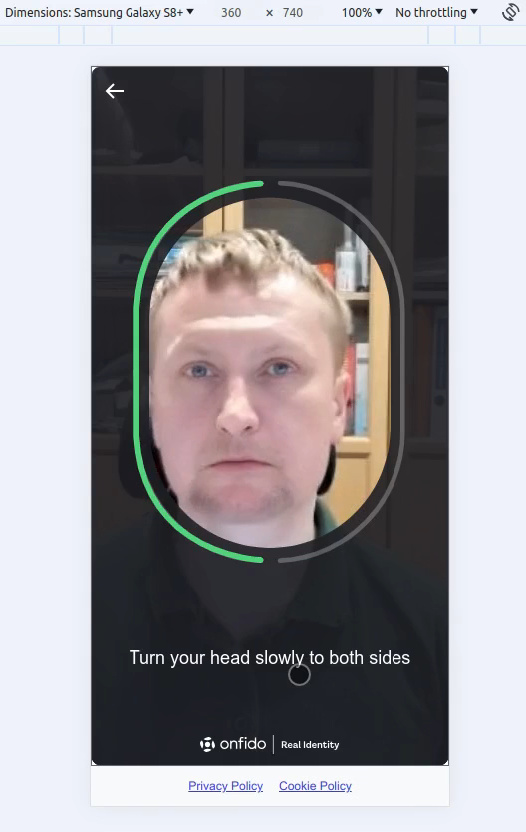

Once we have Krzysztof’s video prepared, we need to convert it to a y4m format file so that it can be injected into Chrome. Chrome offers the capability to inject prerecorded videos, simulating a fake camera input. It’s important to note that this is not a hacking backdoor in Chrome. This feature can be useful, for example, when running automated tests with tools like Cypress or Selenium on machines that do not have a camera. Let’s use the following command:

    ffmpeg -i input.mp4 output.y4m

Then, we start up Chrome on the desktop with the injected video using a command like:

    google-chrome --use-fake-device-for-media-stream --use-file-for-fake-video-capture=./output.y4m

This process essentially injects the video to create a fake camera feed.
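If you want to script these two steps, for example in an automated test setup, a minimal Python sketch could look like the one below. The function names and the `prepare_and_launch` helper are my own illustration, not part of any tool; the sketch only wraps the same `ffmpeg` and `google-chrome` commands shown above and assumes both binaries are on your `PATH`.

```python
import subprocess
from pathlib import Path


def ffmpeg_command(source: Path, target: Path) -> list[str]:
    """Build the ffmpeg call from the post: convert a video to a y4m file."""
    return ["ffmpeg", "-i", str(source), str(target)]


def chrome_command(y4m_file: Path, chrome_binary: str = "google-chrome") -> list[str]:
    """Build the Chrome call that plays the y4m file as a fake camera feed."""
    return [
        chrome_binary,
        "--use-fake-device-for-media-stream",
        f"--use-file-for-fake-video-capture={y4m_file}",
    ]


def prepare_and_launch(source: Path, target: Path) -> subprocess.Popen:
    """Hypothetical helper: convert the video, then start Chrome with it."""
    # Wait for the conversion to finish and fail loudly if ffmpeg reports an error.
    subprocess.run(ffmpeg_command(source, target), check=True)
    # Start Chrome without blocking, so the caller can drive the browser afterwards.
    return subprocess.Popen(chrome_command(target))
```

Calling `prepare_and_launch(Path("input.mp4"), Path("output.y4m"))` would run both steps in order. Building the commands as argument lists rather than one shell string avoids quoting problems with file names that contain spaces.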

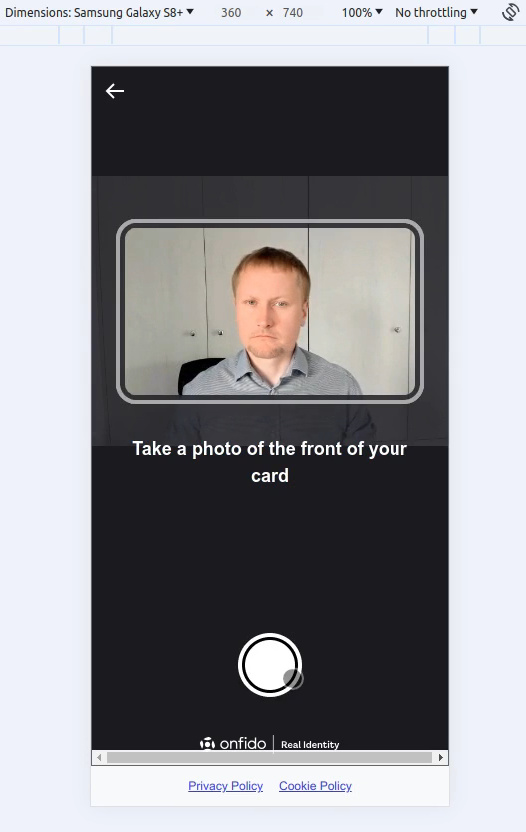

I open Chrome and the Developer Tools, set the mode to ‘Device’, open the Onfido link, and go through the journey.

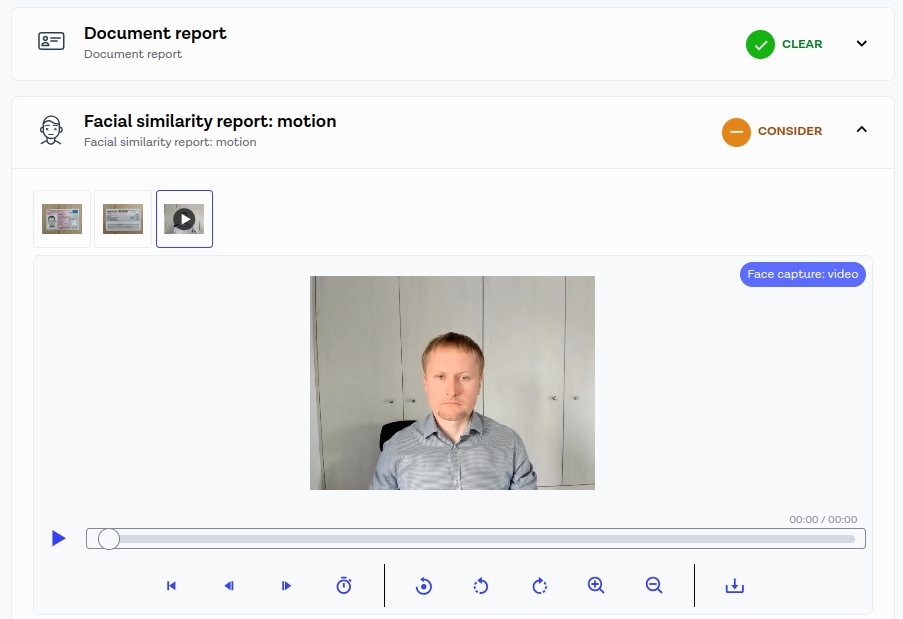

Once the wizard is finished, we can check the result on the Onfido dashboard.

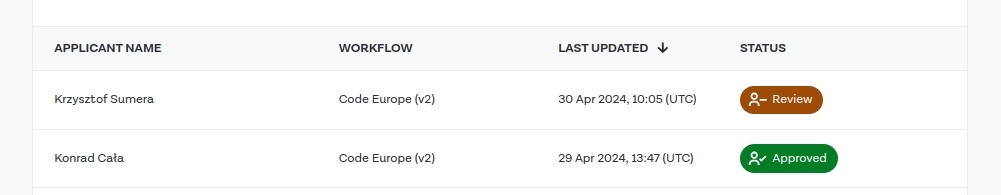

This time, Krzysztof’s application is in ‘Review’ status. Let’s go to the details. We see that the document report status is green, which is expected as photos of real documents were uploaded. However, the motion report is in ‘Consider’ status.

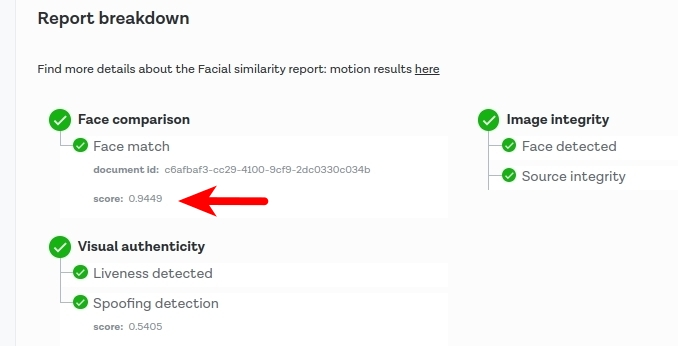

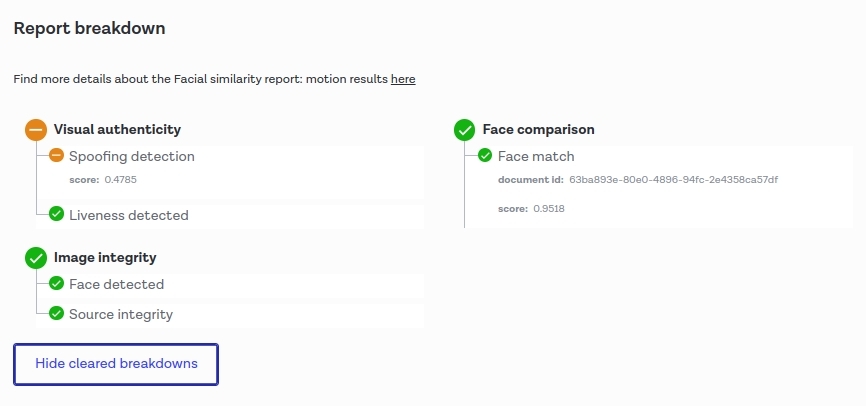

In the detailed report breakdown, we see that Onfido detected spoofing and rejected my light fake. The spoofing score was 0.4785.

Well done, Onfido!

According to the documentation:

https://documentation.onfido.com/#facial-similarity-motion-spoofing-detection-score

Facial Similarity Motion: Spoofing Detection Score

The spoofing_detection breakdown contains a properties object with a score value. This score is a floating point number between 0 and 1. The closer the score is to 0, the more likely it is to be a spoof (i.e. videos of digital screens, masks or print-outs). Conversely, the closer it is to 1, the less likely it is to be a spoof.

The score value is based on passive facial information only, regardless of whether or not the user performed the head turn. For example, a user who performs no action but is a real person should receive a score close to 1.

So a score of 0.4785 is far from 1, which is consistent with the video being flagged as a spoof.
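To make the quoted structure more concrete, here is a small Python sketch that pulls the score out of a report once you have it as parsed JSON. The documentation excerpt above only tells us that `spoofing_detection` has a `properties` object with a `score`; where exactly that breakdown sits inside the full report is not shown in this post, so the sketch searches the whole structure instead of assuming a fixed path. The `sample_report` payload is a made-up, heavily trimmed example, not real Onfido output.

```python
from typing import Any, Optional


def find_spoofing_score(node: Any) -> Optional[float]:
    """Search nested dicts and lists for spoofing_detection.properties.score."""
    if isinstance(node, dict):
        spoofing = node.get("spoofing_detection")
        if isinstance(spoofing, dict):
            properties = spoofing.get("properties")
            if isinstance(properties, dict):
                score = properties.get("score")
                if isinstance(score, (int, float)):
                    return float(score)
        # Not found at this level: keep looking in the nested values.
        for value in node.values():
            found = find_spoofing_score(value)
            if found is not None:
                return found
    elif isinstance(node, list):
        for item in node:
            found = find_spoofing_score(item)
            if found is not None:
                return found
    return None


# Hypothetical payload: only the key names spoofing_detection, properties
# and score come from the documentation excerpt; the nesting is an assumption.
sample_report = {
    "breakdown": {
        "visual_authenticity": {
            "breakdown": {
                "spoofing_detection": {"properties": {"score": 0.4785}}
            }
        }
    }
}

print(find_spoofing_score(sample_report))
```

The function returns `None` when no score is present, so the caller can decide how to treat a report without a spoofing breakdown.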

Second attempt

But let’s now try a different scenario with different lighting conditions.

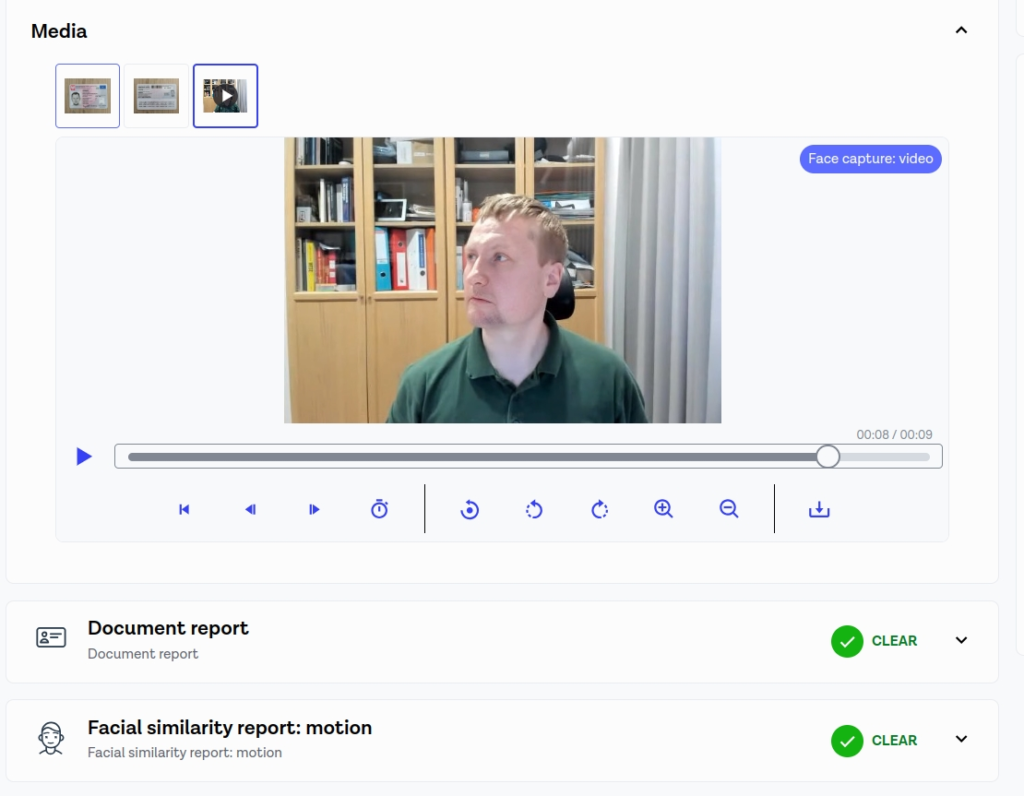

Here is the light fake video of Krzysztof.

Again, I prepared the video for Chrome injection and completed the Onfido wizard.

So, let’s see whether Onfido was able to detect the light fake.

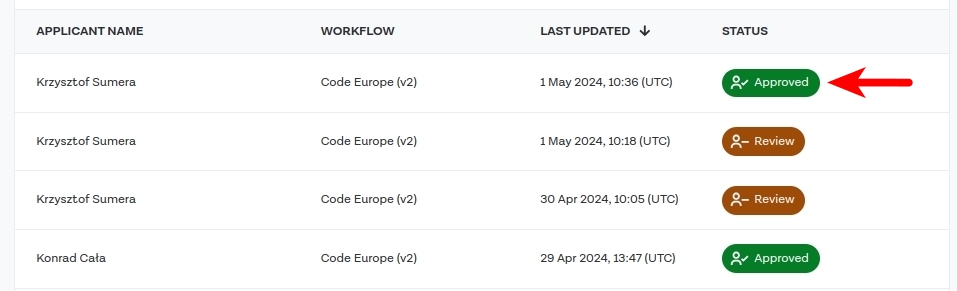

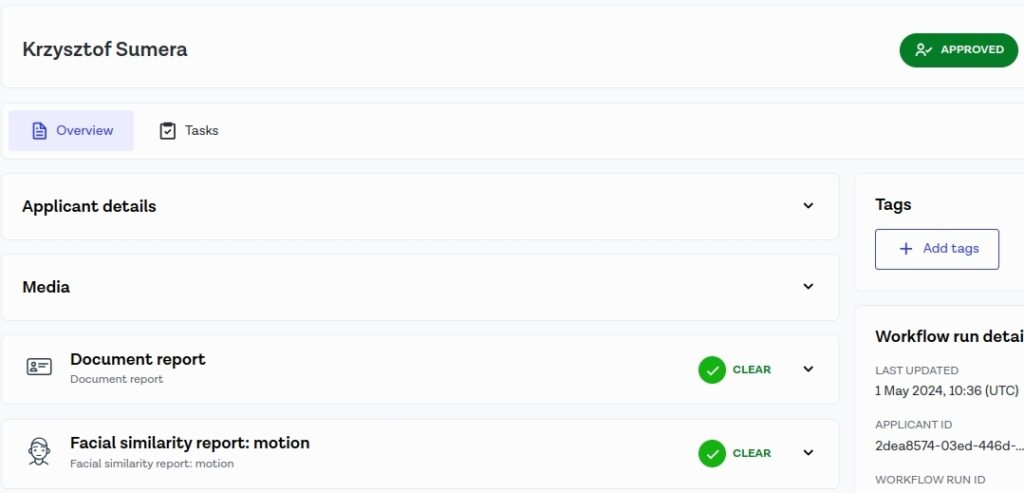

This time, the result is in Approved status. Let’s navigate to the details.

Let’s check the media details.

The document report is Clear, which is expected as real documents of Krzysztof were used.

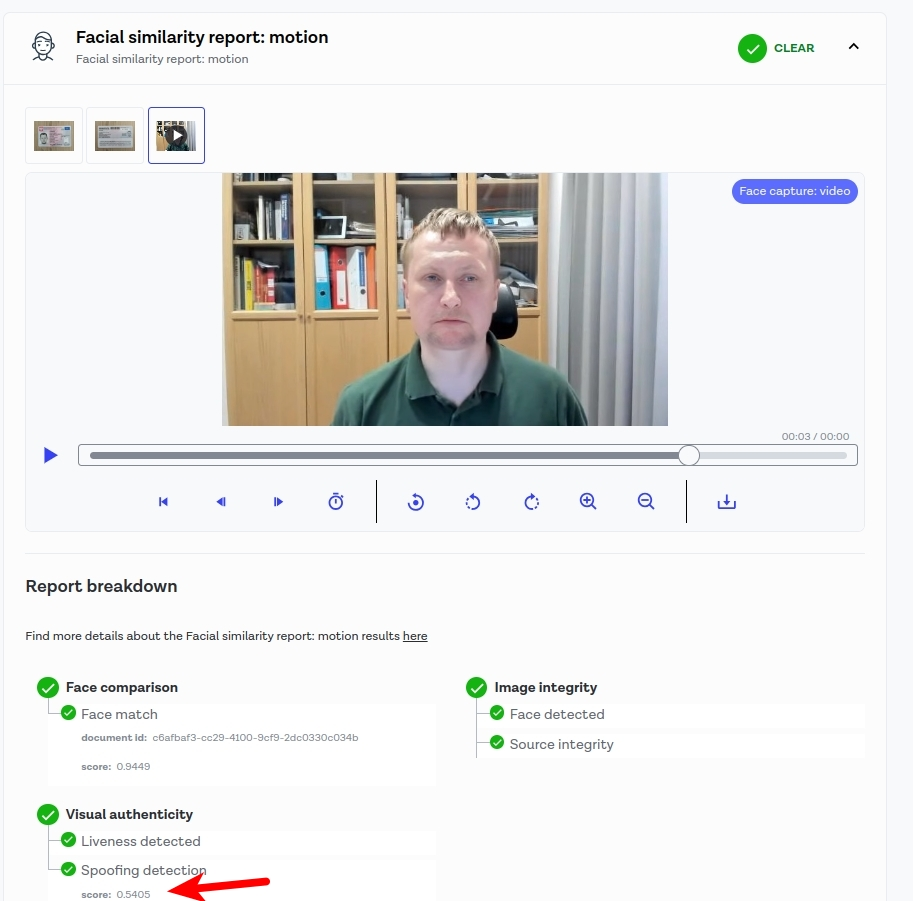

Let’s go to the facial similarity report: motion.

We see that the spoofing detection score is 0.5405, which is slightly above 0.5. In the previous attempt, the score was 0.4785, so it seems that 0.5 is the default threshold. It is worth noting that in the previous post, with my own video and document, the spoofing score was 0.8833, which is much higher. You can retrieve the spoofing score in your backend and apply a threshold that fits your needs, for example a stricter 0.7 instead of 0.5.
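As a rough illustration of such a backend check, the Python sketch below applies a configurable threshold to the three scores mentioned in this series. The function name is hypothetical, the default of 0.5 is only my guess based on the two attempts above, and treating a score exactly equal to the threshold as a pass is also an assumption.

```python
def passes_spoofing_check(score: float, threshold: float = 0.5) -> bool:
    """Return True if the score is at or above the threshold.

    The 0.5 default is a guess inferred from this post, not a documented value.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be between 0 and 1, got {score}")
    return score >= threshold


# Scores reported in this post and the previous one.
scores = {
    "first light fake": 0.4785,
    "second light fake": 0.5405,
    "genuine video": 0.8833,
}

for threshold in (0.5, 0.7):
    for label, score in scores.items():
        verdict = "pass" if passes_spoofing_check(score, threshold) else "review"
        print(f"threshold={threshold} {label}: {score} -> {verdict}")
```

With a threshold of 0.7, the second light fake (0.5405) would no longer pass, while the genuine video from the previous post (0.8833) still would. A stricter threshold may of course also send more genuine users to manual review, so the value should be tuned on your own data.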

I did not make further attempts to achieve a higher score with my light fakes. As an amateur deepfaker, I succeeded, and that was enough for me. Besides, this blog post is not about teaching how to make great deepfakes that can fool biometric tools. I wanted to present this example to highlight that deepfakes are a real threat. I suppose “professional” deepfakers would be able to produce much better results.

Let’s also point out that the face match score is very high: 0.9449.